Abstract

There is a gap between people’s online sharing of personal data and their concerns about privacy. Until now, this gap has been addressed by attempting to match individual privacy preferences with service providers’ options for data handling. This approach has ignored the role different contexts play in data sharing. This paper aims to give privacy engineering a new direction by putting context centre stage and exploiting the affordances of machine learning in handling contexts and negotiating data sharing policies. This research is explorative and conceptual, representing the first development cycle of a design science research project in privacy engineering. The paper offers a concise understanding of data privacy as a foundation for design, extending the seminal contextual integrity theory of Helen Nissenbaum. This theory started out as a normative theory describing the moral appropriateness of data transfers. In our work, the contextual integrity model is extended to a socio-technical theory that could have practical impact in the era of artificial intelligence. New conceptual constructs such as ‘context trigger’, ‘data sharing policy’ and ‘data sharing smart contract’ are defined, and their application is discussed at an organisational and technical level. The constructs and design are validated through expert interviews; contributions to design science research are discussed, and the paper concludes by presenting a framework for further privacy engineering development cycles.

Introduction

People who are concerned about privacy do not necessarily make choices about data sharing that reflect the gravity of their concerns. This gap defines the ‘privacy paradox’, observed in a number of studies (Baruh, Secinti, & Cemalcilar, 2017; Norberg, Horne, & Horne, 2007; Taddei & Contena, 2013). In real life, intentions only explain part of our behaviour (Sheeran, 2002). In online practices, this is demonstrated by the fact that most of us use popular online search engines, knowing full well that the ‘free services’ are paid for by sharing our personal data. The gap to be concerned about, however, is not that our actions do not follow our intentions, but the fact that available privacy solutions are so far behind our online practices. We share an unprecedented amount of personal data, aligning our lives to data-driven smart cities, smart public services, intelligent campuses and other online practices utilising artificial intelligence (AI). We know little about how this data is used. When pushing back, for example using the European General Data Protection Regulation (GDPR) to stop blanket acceptance of opaque privacy policies, we only seem to get more obfuscation, having to fight pop-up windows asking for permission to use our private data for every new site we access. To close the gap and prevent ‘privacy fatigue’ (Choi, Park, & Jung, 2017), we need better privacy solutions, but both the research and the design communities are struggling to see where the solutions should come from.

The inventor of the world wide web, Tim Berners-Lee, admitted in 2017 that ‘we’ve lost control of our personal data’ (Berners-Lee, 2017). This paper is premised on what some may characterise as a defeatist position on data sharing: we will not be able to scale back sharing of personal data, no matter how much we appeal to the GDPR principles of purpose limitation and data minimisation (EU, 2012). The craving for data exposing our behaviour as consumers, citizens and persons caring for our health and cognitive development is already strong (Mansour, 2016). It is being strengthened by the AI arms race, where fierce competition lessens the appetite to address contentious AI issues, such as data privacy, public trust and human rights related to these new technologies (Nature, 2019). The challenge needs to be addressed by stepping up efforts in privacy engineering, searching for more adequate solutions to manage personal data sharing in a world of digital transformation.

This paper aims to advance privacy engineering through contributions addressing semantic, organisational and technical aspects of future solutions. In the ‘Background’ section, we pinpoint the weaknesses of the current discourse on privacy and point to a better understanding of context as a fruitful direction of development. In the following sections, we construct conceptual artefacts and draw up designs that may support digital practices in a society embracing big data and an increasing use of artificial intelligence.

Background

In this paper, we want to advance the field of privacy engineering, defined by Kenny and Borking as ‘a systematic effort to embed privacy relevant legal primitives [concepts] into technical and governance design’ (Kenny & Borking, 2002, p. 2). We would argue that more than legal primitives need to be embedded, but we realise that adding philosophical, social, pedagogical and other perspectives makes privacy engineering utterly complex. No wonder Lahlou, Langheinrich, and Rucker (2005) found that engineers were very reluctant to embrace privacy: Privacy ‘was either an abstract problem [to them], not a problem yet (they are ‘only prototypes’), not a problem at all (firewalls and cryptography would take care of it), not their problem (but one for politicians, lawmakers or, more vaguely, society) or simply not part of the project deliverables’ (Lahlou et al., 2005, p. 60). When the term ‘privacy’ is so often misunderstood and misused in human–computer interaction (HCI) (Barkhuus, 2012), there is a need to converge on a subset of core privacy theories and frameworks to guide privacy research and design (Badillo-Urquiola et al., 2018), taking into account the new requirements of a data-driven society (Belanger & Crossler, 2011).

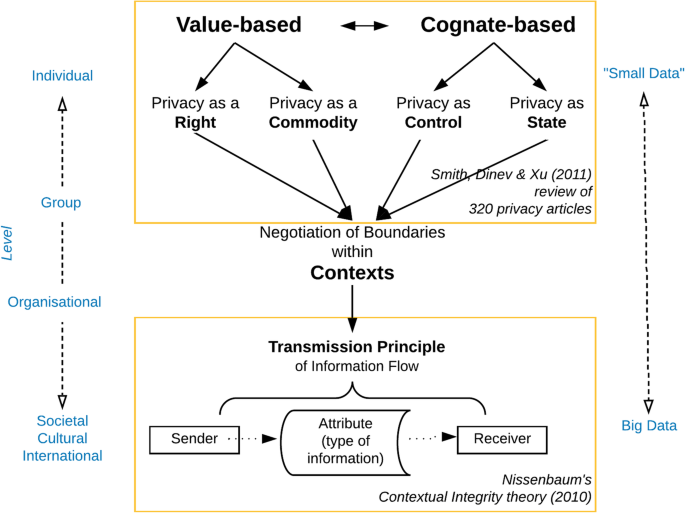

Figure 1 gives an overview of how privacy theories have developed from mainly focusing on the individual handling ‘small data’ to dealing with data sharing in group and societal settings, where new technologies using big data set the scene. In this paper, we see the development of a contextual approach to privacy as necessary to make progress within privacy engineering.

In their interdisciplinary review of privacy-related research, Smith et al. (2011) found that definitional approaches to general privacy could be broadly classified either as value-based or cognate-based (the latter related to the individual’s mind, perceptions and cognition). Sub-classes of these definitions saw privacy as a right or a commodity (that could be traded for perceived benefits), or privacy as individual control of information, or as a state of limited access to information. The problem with these theories is their lack of explanatory power when it comes to shedding light on the boundaries drawn between public and private information in actual online practices in our digital age (Belanger & Crossler, 2011; Smith et al., 2011). In Fig. 1, we have indicated that when met with challenges from group-level interactions in data-rich networked environments, both value-based and cognate-based theories will be drawn towards contextual perspectives on how information flows. We would claim that when boundaries between private and shared information are negotiated, often mediated by some ICT tool, the perspectives from the individual privacy theories may play an active role. There will still be arguments referring to individual data ownership and control, data sharing with cost-benefit considerations and trade-offs or the ability to uphold solitude, intimacy, anonymity or reserve (the four states of individual privacy identified by Westin, 2003). However, these perspectives will serve as a backdrop of deliberations that require another set of privacy constructs, for which context will serve as the key concept.

It may be objected that to highlight context may just be to exchange one elusive concept (privacy) for another borderless concept (context). Smith et al. (2011) were not at all sure that context-driven studies would produce an overall contribution to knowledge, ‘unless researchers are constantly aware of an over-arching model’ (ibid., p. 1005). To contribute to an understanding of privacy context, we have pointed to the theory of contextual integrity (CI) as a candidate for further development (see Fig. 1). In the following, we introduce the CI theory, focussing on how this theory’s concept of context is to be understood.

The contextual integrity theory

Over the last 15 years, CI has been one of the most influential theories explaining the often conflicting privacy practices we have observed along with the development of ubiquitous computing. When Helen Nissenbaum first launched CI, she used philosophical arguments to establish ‘[c]ontexts, or spheres, [as] a platform for a normative account of privacy in terms of contextual integrity’ (Nissenbaum, 2004, p. 120). The two informational norms she focussed on were norms of appropriateness and norms of information flow or distribution. ‘Contextual integrity is maintained when both types of norms are upheld, and it is violated when either of the norms is violated’ (ibid., p. 120).

Privacy norms are not universal: ‘contextual integrity couches its prescriptions always within the bounds of a given context’ (Nissenbaum, 2004, p. 136). Non-universal norms may seem like an oxymoron, as a norm is supposed to cover more than one case. What role CI gives to context is a key question that we see the originator of the theory grappling with in the 2004 article. ‘One of the key ways contextual integrity differs from other theoretical approaches to privacy is that it recognises a richer, more comprehensive set of relevant parameters’, Nissenbaum (2004, p. 133) states, reflecting on her application of CI to three cases (government intrusion, access to personal information and access to personal space) that have dominated privacy law and privacy policies in the USA. However, access to more detail (a richer set of parameters) does not alter the way traditional privacy approaches have worked: they infer whether or not privacy is violated from the characteristics of the setting matched against individual preferences. Barkhuus observes as follows:

It (..) appears rather narrow to attempt to generate generalized, rule-based principles about personal privacy preferences. Understanding personal privacy concern requires a contextually grounded awareness of the situation and culture, not merely a known set of characteristics of the context. (Barkhuus, 2012, p. 3)

This question of how context should be understood in relation to preference, as something more than characteristics of individual preferences, represents a research gap that will be addressed in this paper, as it goes to the heart of the privacy discourse: where are norms of the appropriateness of the exchange anchored? Internally, in the value system of the individual, or externally, in the negotiated relationships to others in situations?

First, we will explore how context is to be understood, before we return to the question of how preference and context are related.

Understanding context

Context is the set of circumstances that frames an event or an object (Bazire & Brézillon, 2005). This generally accepted meaning of the term is not very helpful when one wants to use it in a specific discipline where a clear definition is needed. There are, however, many definitions of context to choose from. Bazire and Brézillon (2005) collected a corpus of more than 150 definitions, most of them belonging to the cognitive sciences (artificial intelligence being the most represented area). In human cognition, they note, there are two opposite views about the role of context. The first view considers cognition as a set of general processes that modulate the instantiation of general pieces of knowledge by facilitation or inhibition. In the second view (in the area of situated cognition), the context has a more central role as a component of cognition by determining the conditions of knowledge activation as well as the limit of knowledge validity.

These two opposite views have parallels with our question above about the basis of a decision on the appropriateness of data exchange. The context may have an internal nature or an external one. ‘On the one hand, context is an external object relative to a given object, when, on the other hand, context belongs to an individual and is an integral part of the representation that this individual is building of the situation where he is involved. According to this second viewpoint, “context cannot be separated from the knowledge it organises, the triggering role context plays and the field of validity it defines”’ (Bazire & Brézillon, 2005, citing Bastien, 1999).

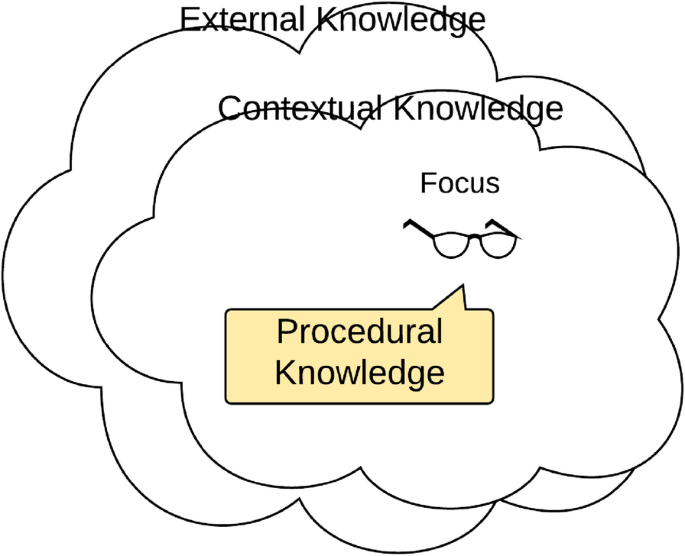

For privacy deliberations, in this paper, we follow the second viewpoint, focussing on knowledge organisation. Context is itself contextual; context is always relative to something, described as the focus of attention. For a given focus, Brézillon and Pomerol (1999) consider context as the sum of three types of knowledge. Contextual knowledge is the part of the context that is relevant for making decisions, and which depends on the actor and on the decision at hand. By definition, this creates a type of knowledge that is not relevant, which Brézillon and Pomerol (1999) call external knowledge. However, what is relevant or not evolves with the progress of the focus, so the boundaries between external and contextual knowledge are porous. A subset of the contextual knowledge is proceduralised for addressing the current focus. ‘The proceduralized context is a part of the contextual knowledge that is invoked, assembled, organised, structured and situated according to the given focus and is common to the various people involved in decision making’ (Brézillon, 2005, p. 3); see Fig. 2.

Fig. 2 Different kinds of context knowledge (based on Brézillon & Pomerol, 1999)
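The three kinds of context knowledge can be illustrated with a minimal Python sketch. It is an illustration only, not Brézillon and Pomerol's model: the names `KnowledgeItem`, `partition_context`, the topic tags and the `shared_by_all` set are all assumptions introduced here. Relevance is reduced to a simple tag match against the focus, and 'common to the various people involved' is reduced to membership in a given set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KnowledgeItem:
    name: str
    topics: frozenset  # hypothetical tags saying what the item bears on


def partition_context(items, focus, shared_by_all):
    """Sort knowledge items relative to a focus of attention.

    external       -> not relevant to the focus
    contextual     -> relevant to the focus
    proceduralised -> contextual items that are also common to everyone
                      involved in the decision (a subset of contextual)
    """
    parts = {"external": [], "contextual": [], "proceduralised": []}
    for item in items:
        if focus not in item.topics:
            parts["external"].append(item.name)
            continue
        parts["contextual"].append(item.name)
        if item.name in shared_by_all:
            parts["proceduralised"].append(item.name)
    return parts


# Hypothetical example data.
items = [
    KnowledgeItem("location", frozenset({"navigation", "advertising"})),
    KnowledgeItem("calendar", frozenset({"navigation"})),
    KnowledgeItem("heart_rate", frozenset({"health"})),
]

navigation = partition_context(items, "navigation", shared_by_all={"location"})
health = partition_context(items, "health", shared_by_all={"heart_rate"})
print(navigation)
print(health)
```

Running the function with two different foci shows the porous boundary described above: `heart_rate` is external knowledge when the focus is navigation, and becomes contextual (and here also proceduralised) when the focus moves to health.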

The first point of view on contexts discussed above (modulation of an external object by facilitation or inhibition) has similarities with what we could call a preference approach (matching individual preference characteristics with alternative actions). In the next sections, we will explore limitations to this approach, reviewing literature from the fields of personalised learning and privacy standardisation.

Limitations of a preference approach

The individual privacy preference approach has similarities with the approach of personalised learning (Campbell, Robinson, Neelands, Hewston, & Massoli, 2007), which has been critiqued for lacking a nuanced understanding of how the needs of different learners can be understood and catered for in school. Prain et al. (2012) argue that the critical element in enacting personalised learning is the ‘relational agency’ operating within a ‘nested agency’ in the development of differentiated curricula and learners’ self-regulatory capacities.

The construct of ‘nested agency’ recognises that the agency of both groups [teachers and learners] as they interact is constrained by structural, cultural and pedagogical assumptions, regulations, and practices, including prescriptive curricula, and actual and potential roles and responsibilities of teachers and students in school settings. (Prain et al., 2012, p. 661)

The main lesson learnt from the well-researched field of personalised learning is the need for a better understanding of the contexts in which the learning occurs.

A special group of learners are persons with disabilities. In 2008, ISO/IEC published the Access for All standard, aiming ‘to meet the needs of learners with disabilities and anyone in a disabling context’ (ISO, 2008). The standard provided ‘a common framework to describe and specify learner needs and preferences on the one hand and the corresponding description of the digital learning resources on the other hand, so that individual learner preferences and needs can be matched with the appropriate user interface tools and digital learning resources’ (ISO, 2008). The Canadian Fluid Project has proposed to use the same framework to define Personal Privacy Preferences, working as ‘a single, personalised interface to understand and determine a privacy agreement that suits the function, risk level and personal preferences’, so that ‘private sector companies would have a standardised process for communicating or translating privacy options to a diversity of consumers’ (Fluid Project, n.d.).

Using the ISO 24751:2008 framework to define personal privacy preferences implies acceptance of the standard’s definition of context as ‘the situation or circumstances relevant to one or more preferences (used to determine whether a preference is applicable)’. Then privacy is seen as a characteristic of the individual, rather than a relationship between different actors mediated by contexts. The Canadian project proposes to ‘leverage ISO 24751* (Access for All) to discover, assert, match and evaluate personal privacy and identity management preferences’ (Fluid Project, n.d.). However, the challenge is not to facilitate matching between predefined preferences and alternative representations of web content (which was the focus of the Access for All standard); the challenge is to orchestrate dynamic privacy policy negotiations in the particular contexts of a great number of online activities. If one sees only individuals with needs, one tends to overlook other factors, like culture, social norms and activity patterns embedded in complex settings.
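The limitation of pure preference matching can be made concrete with a small Python sketch. It is a hypothetical illustration and does not reproduce the ISO 24751 data model or the Fluid Project's design: the `Preference` class, the `match` function and the example contexts are assumptions. Context is used here only in the sense of the quoted definition, as a filter that decides whether a predefined preference is applicable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Preference:
    data_type: str
    allow_sharing: bool
    applies_in: frozenset  # contexts in which this preference is applicable


def match(preferences, data_type, context):
    """Return the stored decision, or None if no preference is applicable."""
    for pref in preferences:
        if pref.data_type == data_type and context in pref.applies_in:
            return pref.allow_sharing
    return None  # nothing was predefined for this situation


# Hypothetical preferences of one individual.
prefs = [
    Preference("location", True, frozenset({"navigation"})),
    Preference("location", False, frozenset({"advertising"})),
]

print(match(prefs, "location", "navigation"))
print(match(prefs, "location", "advertising"))
print(match(prefs, "location", "emergency"))
```

The third call returns `None`: a situation that the individual did not anticipate has no answer in a matching scheme, and nothing in the scheme represents the other actors, norms or activities with which an answer could be negotiated.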

To make context ‘a first-class citizen’ (Scott, 2006) in privacy engineering, CI needs to be developed from a normative ex post theory to a theory positioned more in the middle of privacy negotiations supported by information technology. In the next subsection, we will see how the theoretical base of CI has been broadened by Helen Nissenbaum and different research groups.

Formalising CI

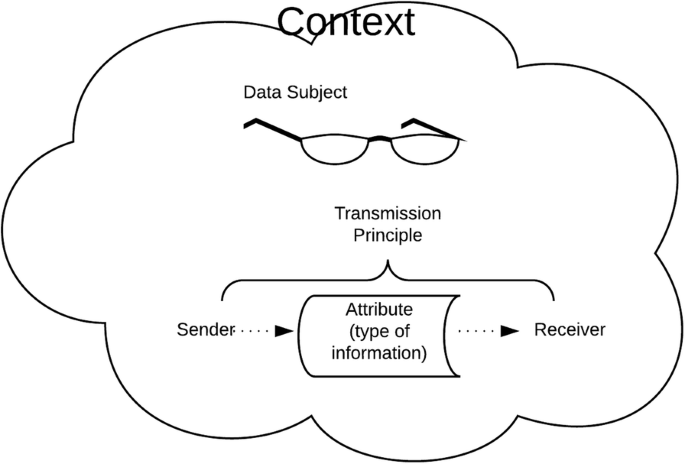

Barth, Datta, Mitchell, and Nissenbaum (2006) made a first attempt at a formal model of a fragment of CI, focussing on ‘communicating agents who take on various roles in contexts and send each other messages containing attributes of other agents’ (Barth et al., 2006, p. 4). In 2010, Nissenbaum provided a nine-step decision heuristic to analyse new information flows, thus determining whether a new practice represents a potential violation of privacy. In this heuristic, she for the first time specified precisely which concepts should be defined to fulfil a CI evaluation, see Fig. 3 (Nissenbaum, 2010, pp. 182-183).

Fig. 3 The theory of contextual integrity (after Nissenbaum, 2010)

From this heuristic, authors in collaboration with Nissenbaum have developed templates for tagging privacy policy descriptions (e.g. Facebook’s or Google’s privacy policy statements) to be able to analyse how these documents hold up against the CI theory (Shvartzshnaider, Apthorpe, Feamster, & Nissenbaum, 2018).
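The idea of agents in roles sending messages about other agents can be sketched in a few lines of Python. This is a deliberately reduced illustration and not the formalism of Barth et al. (2006) or Nissenbaum's heuristic: the `Flow` class, the `NORMS` allow-list and the healthcare example are assumptions made here, and a norm is simplified to an exact match on context, roles and attribute.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    context: str
    sender_role: str
    recipient_role: str
    subject_role: str  # role of the agent the information is about
    attribute: str     # type of information being transmitted


# Hypothetical informational norms for one context, written as allowed flows.
NORMS = frozenset({
    Flow("healthcare", "patient", "doctor", "patient", "diagnosis"),
    Flow("healthcare", "doctor", "specialist", "patient", "diagnosis"),
})


def respects_norms(flow, norms=NORMS):
    """A flow is appropriate in this sketch only if a norm explicitly allows it."""
    return flow in norms


ok = Flow("healthcare", "patient", "doctor", "patient", "diagnosis")
leak = Flow("healthcare", "doctor", "employer", "patient", "diagnosis")
print(respects_norms(ok), respects_norms(leak))
```

The same attribute about the same subject is appropriate in one flow and a violation in another; what differs is the recipient's role within the context, not a property of the data or of the individual's preferences.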

CI describes…
